Scallops are a national treasure. They are a taonga species, a key component of the marine ecosystem and highly valued as kaimoana by customary, recreational and commercial fishers.

But major declines in scallop abundance are being recorded across New Zealand.

This is thought to be largely due to a combination of factors, including overfishing, recruitment limitation and habitat degradation.

The current use of dredges to harvest and survey scallop populations isn’t helping either, with the heavy underwater equipment impacting on both the shellfish and their habitat.

NIWA fisheries scientist Dr James Williams has been working with University of Canterbury experts Professor Richard Green (computer vision) and Associate Professor Michael Hayes (mechatronics) to develop innovative, non-invasive alternatives for surveying and harvesting scallops.

And thanks to a combination of some smart computer algorithms, and thousands upon thousands of little blue scallop eyes, an answer may now be on the horizon.

Independent scientific surveys are essential to the management of a sustainable scallop fishery, says Williams.

The surveys estimate population abundance, distribution and size. Together with information on scallop biology and ecology and the nature and effects of fishing, estimates are generated to determine the number or biomass (combined weight) that can be harvested sustainably.

“Surveys are really the bread and butter of monitoring how our fisheries are doing.”

Using dredges for surveys, however, not only comes at an environmental cost, but is also inefficient because the dredge catches only a proportion of the scallops in its path.
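To see why a partial catch matters, here is a minimal sketch of a swept-area calculation of the general kind used in dredge surveys. It is an illustration only, not NIWA's actual assessment method: the function name, its parameters and the example numbers are all assumptions made for this sketch.

```python
def estimate_abundance(catch_count, swept_area_m2, dredge_efficiency, survey_area_m2):
    """Scale a dredge catch up to an abundance estimate for a survey area.

    All names and inputs are hypothetical. dredge_efficiency is the
    proportion (0 to 1] of scallops in the dredge path that are caught.
    """
    if not 0 < dredge_efficiency <= 1:
        raise ValueError("dredge_efficiency must be in the range (0, 1]")
    if swept_area_m2 <= 0:
        raise ValueError("swept_area_m2 must be positive")

    # Scallops actually present per square metre of swept seabed,
    # after correcting for the ones the dredge missed.
    density_per_m2 = catch_count / (swept_area_m2 * dredge_efficiency)

    # Extrapolate that density to the whole survey area.
    return density_per_m2 * survey_area_m2


# Made-up example: 50 scallops caught over 1,000 square metres by a dredge
# assumed to catch half of what lies in its path, scaled to 10,000 square metres.
print(estimate_abundance(50, 1000.0, 0.5, 10000.0))
```

Because the catch is divided by the efficiency, any uncertainty in that proportion carries straight through to the abundance estimate, which is one reason a method that sees the scallops directly is attractive.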

“The idea was to come up with a better, more effective way than dredging. One that doesn’t negatively affect undersize scallops, other organisms and their supporting habitats.”

With the help of NIWA’s Marine Science Advisor Dr Barb Hayden, Williams started working with the Computer Vision team at the University of Canterbury. Several thousand images of scallops were captured using underwater cameras mounted on the university’s underwater drone (ROV). The images were then used by data scientists in designing algorithms to detect the scallops.

“To be honest I was pretty dubious about whether it could be used for scallops,” acknowledges Williams.

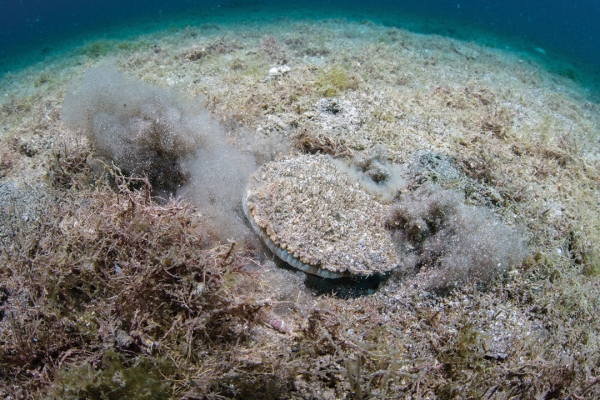

“Our species, Pecten novaezelandiae, is very cryptic. It recesses itself into the seabed, essentially covering itself in a fine layer of sand or silt to hide.

“I thought, oh it’s going to be really difficult to see using cameras.”

This is where those beautiful scallop eyes come squarely into frame. Scallops are bivalve molluscs with two fan-shaped shells connected by a hinge. When the two shells open for the scallop to feed, there are up to 200 eyes staring out from a multitude of sensory tentacles found along the margins.

Scallops use these eyes to detect motion and changes in light intensity that may indicate a predator approaching. Unlike many other bivalves, scallops can also move using jet propulsion by clapping their shells together.

“They can actually lift themselves off the seabed and swim up to a few metres,” says Williams. All of this is extremely relevant when it comes to bringing artificial intelligence (AI) into play.

“It’s those distinctive features, the tentacles and the eyes, which stick out when the scallop is at rest that you can see in the camera imagery,” says Williams.

“The work to date suggests that we can achieve a high rate of detection using this image-based machine learning.”

University of Canterbury Computer Science PhD student, Tim Rensen, explains: “The artificial intelligence is what’s called a convolutional neural network (CNN) which basically slides a bunch of stencils over the camera image. It’s a scallop-shaped stencil and when it goes over a scallop, it fits, the pattern fits and that’s a match for a scallop.”
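The stencil idea Rensen describes can be sketched in a few lines of plain Python. This is a toy illustration, not the team's network: a real CNN learns many stencils (filters) from training images and stacks them in layers, whereas here a single hand-made stencil is slid over a tiny made-up image. The function names and the data are assumptions for this sketch.

```python
def slide_stencil(image, stencil):
    """Slide a small stencil over an image and score the fit at each position."""
    image_h, image_w = len(image), len(image[0])
    stencil_h, stencil_w = len(stencil), len(stencil[0])
    scores = []
    for row in range(image_h - stencil_h + 1):
        score_row = []
        for col in range(image_w - stencil_w + 1):
            # Multiply overlapping pixels and stencil values, then add them up.
            total = 0
            for dr in range(stencil_h):
                for dc in range(stencil_w):
                    total += image[row + dr][col + dc] * stencil[dr][dc]
            score_row.append(total)
        scores.append(score_row)
    return scores


def best_match(scores):
    """Return the (row, col) position with the highest score."""
    best = max((value, r, c)
               for r, score_row in enumerate(scores)
               for c, value in enumerate(score_row))
    return best[1], best[2]


# A made-up 5x5 "image" containing one plus-shaped object, and a matching stencil.
image = [
    [0, 0, 0, 0, 0],
    [0, 0, 1, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 1, 0, 0],
    [0, 0, 0, 0, 0],
]
stencil = [
    [0, 1, 0],
    [1, 1, 1],
    [0, 1, 0],
]

scores = slide_stencil(image, stencil)
print(best_match(scores))  # top-left corner of the best-fitting position
```

The score peaks where the stencil lines up with the object, which is the "pattern fits" moment in Rensen's description.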

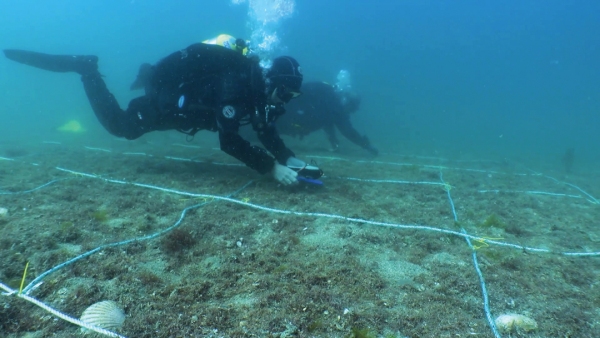

A key step in the development process involves using divers to compare what the computer is finding with what is actually on the seafloor.

Richie Hughes is one of NIWA’s scientific divers involved in this validation step.

“We carefully search and collect all scallops present in the area of the seabed transect that the ROV has filmed and bring them to the surface to be counted and measured,” says Hughes.

“I had to go through every image and annotate individual scallops by drawing a square around every scallop and categorising whether it was live or a shell.

“When we could clearly see the live scallops in the images on screen and the data science guys were like, ‘this is working well, we’re getting a good detection rate’, for me that was the turning point where I thought, ‘maybe there’s something in this’.”
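One common way to turn hand-drawn squares into a detection rate is to check how much each annotated box overlaps a box proposed by the computer. The sketch below assumes this overlap approach (intersection over union, with a simple greedy matching), which the article does not confirm the team uses; the function names, the 0.5 threshold and the box coordinates are all made up for illustration.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    overlap_w = max(0, min(box_a[2], box_b[2]) - max(box_a[0], box_b[0]))
    overlap_h = max(0, min(box_a[3], box_b[3]) - max(box_a[1], box_b[1]))
    intersection = overlap_w * overlap_h
    area_a = (box_a[2] - box_a[0]) * (box_a[3] - box_a[1])
    area_b = (box_b[2] - box_b[0]) * (box_b[3] - box_b[1])
    union = area_a + area_b - intersection
    return intersection / union if union > 0 else 0.0


def detection_rate(annotated, detected, iou_threshold=0.5):
    """Fraction of annotated scallops matched by a detection (greedy matching)."""
    if not annotated:
        return 0.0
    unused = list(detected)
    found = 0
    for truth in annotated:
        best_index, best_score = None, iou_threshold
        for index, candidate in enumerate(unused):
            score = iou(truth, candidate)
            if score >= best_score:
                best_index, best_score = index, score
        if best_index is not None:
            unused.pop(best_index)  # each detection may match only one scallop
            found += 1
    return found / len(annotated)


# Hypothetical boxes: two annotated scallops, one found and one missed.
annotated = [(0, 0, 10, 10), (20, 20, 30, 30)]
detected = [(1, 1, 11, 11), (50, 50, 60, 60)]
print(detection_rate(annotated, detected))
```

The same matching also exposes false alarms: any detection left unmatched at the end points at something that was not an annotated scallop.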

Williams says it has opened his eyes to the potential of imagery and AI to gather so much more data.

“To be able to capture high quality underwater images and automate the process of detecting and measuring the features of interest by training the computer is amazing.”

The work has only just begun. With recent funding approval of $1 million from MBIE’s Endeavour Fund, this ‘Smart Idea’ research project will continue over the next three years.

The first stage is the refinement of the image and AI-based method of accurately surveying scallops. The focus here is on collecting more imagery of scallops in different environments.

Detectability can vary depending on habitat and water clarity and this will need to be programmed into the machine learning system to help finalise detection and measurement of the shellfish.

The second stage is to develop a proof-of-concept, non-invasive harvester that differentiates scallops from other objects on the seabed, assesses whether they are of legal size and picks them up with minimal contact with the surrounding seafloor.
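The decision such a harvester would need to make for each detected object can be sketched as a simple filter. Everything here is hypothetical: the labels, the pixel-to-millimetre scale and the minimum legal size are placeholder parameters for illustration, not values from the project or from fishing regulations.

```python
def shell_width_mm(box_width_px, mm_per_pixel):
    """Convert a detection's width in pixels to millimetres.

    mm_per_pixel is an assumed calibration value that would depend on the
    camera and its height above the seabed.
    """
    return box_width_px * mm_per_pixel


def is_harvestable(label, width_mm, min_legal_mm):
    """Pick up only live scallops at or above the supplied minimum size."""
    return label == "live" and width_mm >= min_legal_mm


# Made-up detections: (label, width in pixels). The scale and the size limit
# are placeholders, not real calibration or regulatory figures.
detections = [("live", 220), ("live", 150), ("shell", 240)]
MM_PER_PIXEL = 0.5
MIN_LEGAL_MM = 100.0

for label, width_px in detections:
    width = shell_width_mm(width_px, MM_PER_PIXEL)
    print(label, width, is_harvestable(label, width, MIN_LEGAL_MM))
```

Keeping the size limit as a parameter means the same check could be reused wherever the rules differ.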

Williams is excited about what the research team has achieved already and where the project is heading. He’s equally enthusiastic about the implications of this data science collaboration for future marine research.

“To be able to overlay images and stitch them together so you build up a photo mosaic image of the entire seabed is going to rapidly advance our understanding of benthic habitats and marine ecosystems.”

He points out that the use of AI is rapidly spreading across NIWA’s research platforms and says the potential benefits are enormous.

“It is changing the way we do our science.”

This story forms part of Water and Atmosphere - May 2022.