Image Analysis

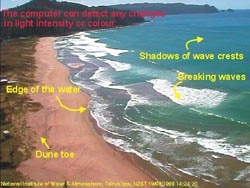

Once we have collected the images and brought them into the lab, the next stage is to take exact measurements from them. We can train the computer to recognise objects by programming it to look for changes in light intensity or colour in the image.

This way it can find and count breaking waves, vegetation, people, the shadows of unbroken waves and more. Both the time-stacking and averaging techniques described below were pioneered by Rob Holman and Oregon State University.
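To make the idea of "looking for a change in light intensity" concrete, here is a minimal sketch in Python with NumPy. It flags pixels where the brightness gradient exceeds a threshold. The function name `find_edges`, the threshold value and the synthetic image are all assumptions made for illustration; the real processing may well use something more sophisticated.

```python
import numpy as np

def find_edges(image, threshold):
    """Return a boolean mask of pixels where intensity changes sharply."""
    # np.gradient returns the rate of change along rows, then along columns
    grad_rows, grad_cols = np.gradient(image.astype(float))
    magnitude = np.hypot(grad_cols, grad_rows)
    return magnitude > threshold

# Synthetic image (an assumption): dark water on the left, bright foam on the right
image = np.zeros((5, 8))
image[:, 4:] = 200.0

edges = find_edges(image, threshold=50.0)
# Columns that contain at least one flagged pixel
edge_columns = np.flatnonzero(edges.any(axis=0))
print(edge_columns)
```

The flagged columns straddle the boundary between the dark and bright regions, which is where an object outline would be drawn.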

Averaged images

Some features can confuse the computer. For example, if we want to find the position of the shoreline, or of offshore sand bars, which can be identified by breaking waves, we are not really interested in the pattern made by individual waves but in the general location of breaking.

Unfortunately, the computer is more likely to find the sharp changes in light intensity caused by the broken wave crests and miss the shoreline and bars completely. The solution is to average together ten minutes' worth of images (600 images, or one per second). The individual swash at the shoreline disappears, and so do the random wave-breaking events around the bar. The average shoreline and average position of the bar are clearly visible.
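The averaging step can be sketched as follows. This is an illustration under stated assumptions, not the production code: the frames are synthetic, with random "breaking" events that are much more frequent over a hypothetical bar at columns 20 to 24, and the names `time_average` and `break_probability` are invented for the example.

```python
import numpy as np

def time_average(frames):
    """Average a stack of frames (time, rows, columns) into a single image."""
    return np.mean(np.asarray(frames, dtype=float), axis=0)

rng = np.random.default_rng(0)
n_frames, height, width = 600, 4, 50  # 600 frames, as in ten minutes at one per second

# Assumed chance that a pixel shows white water in any one frame:
# rare everywhere except over the bar (columns 20 to 24)
break_probability = np.full(width, 0.05)
break_probability[20:25] = 0.6

breaking = rng.random((n_frames, height, width)) < break_probability
frames = 50.0 + 150.0 * breaking  # dark water = 50, white water = 200

averaged = time_average(frames)
profile = averaged.mean(axis=0)       # cross-shore brightness profile
bar_column = int(np.argmax(profile))  # brightest band marks the bar
```

Any single frame is a scatter of bright pixels, but the averaged image shows one smooth bright band where breaking is most frequent.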

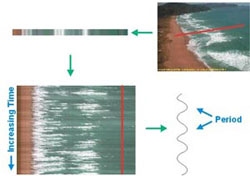

Time-stacks

If we just collect a single snapshot and an averaged image every hour, we lose a lot of information about patterns that change over short time periods (for example, waves passing the camera). This is not much help if we are interested in finding the wave period (the time between wave crests), or in seeing how quickly the waves slow down in shallow water. Unfortunately, if we collected every image over a 10-minute period, our computer system and phone links would quickly overload. The solution is to collect not the whole image but just a small region of interest from each one, which we call a time-stack. From the time-stack, we can easily retrieve such things as wave speed and wave period.
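As a rough sketch of how a time-stack might be built and used, the Python example below keeps one pixel row from every frame, reads the wave period from the spectrum of a single pixel, and estimates wave speed from the delay between two pixels. Everything here is an assumption for illustration: the synthetic sine-wave frames, the two-frames-per-second sampling, the one-metre pixel spacing, and the helper names `build_timestack`, `wave_period` and `best_lag`.

```python
import numpy as np

def build_timestack(frames, row):
    """Keep one pixel row from every frame; result has shape (time, position)."""
    return np.stack([frame[row, :] for frame in frames])

def wave_period(series, dt):
    """Dominant period (s) of a pixel-intensity time series, from its spectrum."""
    spectrum = np.abs(np.fft.rfft(series - series.mean()))
    freqs = np.fft.rfftfreq(len(series), d=dt)
    peak = np.argmax(spectrum[1:]) + 1  # skip the zero-frequency bin
    return 1.0 / freqs[peak]

def best_lag(a, b, max_lag):
    """Samples by which series b trails series a, searched over 0 <= lag < max_lag."""
    a = a - a.mean()
    b = b - b.mean()
    scores = [np.dot(a[:len(a) - k], b[k:]) / (len(a) - k) for k in range(max_lag)]
    return int(np.argmax(scores))

# Synthetic frames (all values are illustrative assumptions)
dt = 0.5                                # seconds between frames
true_period, true_speed = 8.0, 5.0      # seconds, metres per second
wavelength = true_period * true_speed   # 40 m
n_frames, height, width = 1200, 3, 20   # ten minutes of small frames
t = (np.arange(n_frames) * dt)[:, None, None]
x = np.arange(width, dtype=float)[None, None, :]  # assume 1 m per pixel
waves = np.sin(2 * np.pi * (t / true_period - x / wavelength))
frames = 100.0 + 50.0 * waves * np.ones((1, height, 1))

stack = build_timestack(frames, row=1)
period = wave_period(stack[:, 0], dt)

separation = 10  # pixels, equal to metres under the spacing assumed above
# max_lag is kept shorter than one wave period (16 samples) to avoid ambiguity
lag = best_lag(stack[:, 0], stack[:, separation], max_lag=12)
speed = separation / (lag * dt)  # assumes lag > 0, as it is for this data
```

The time-stack is 1200 by 20 values instead of 1200 full images, yet it still holds enough to recover both the period and the speed of the synthetic waves.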

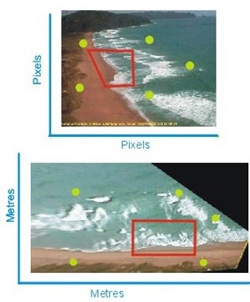

Rectifying

Another problem we encounter when trying to get exact numbers from the images is that measurements taken from the picture are in pixels (picture elements) and not in metres. Since the camera looks sideways and downward (an oblique view), pixels that are far from the camera represent a greater area than pixels close to it. (Tairua beach is not really a wedge shape!) To correct for this we use a number of surveyed ground-control points (see Image of Ground Control survey) and the position of the camera to rectify, or reshape, the image to a bird’s-eye view. More information on this can be obtained from any basic photogrammetry manual.
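One simplified way to illustrate rectification is a planar homography: if every point of interest is assumed to lie on a flat surface (for example, sea level), four or more ground-control points are enough to map pixels to ground coordinates. The Python sketch below uses that simplification rather than the full photogrammetric solution with the camera position, and its control points, the matrix `true_h` and the function names are all hypothetical.

```python
import numpy as np

def fit_homography(pixel_pts, ground_pts):
    """Estimate the 3x3 pixel-to-ground mapping from matched point pairs."""
    rows = []
    for (u, v), (x, y) in zip(pixel_pts, ground_pts):
        rows.append([u, v, 1, 0, 0, 0, -x * u, -x * v, -x])
        rows.append([0, 0, 0, u, v, 1, -y * u, -y * v, -y])
    # The solution is the right singular vector with the smallest singular value
    _, _, vt = np.linalg.svd(np.asarray(rows, dtype=float))
    return vt[-1].reshape(3, 3)

def pixel_to_ground(h, u, v):
    """Map one pixel (u, v) to ground coordinates (x, y) in metres."""
    x, y, w = h @ np.array([u, v, 1.0])
    return x / w, y / w

# A made-up "true" oblique view, used only to generate consistent survey data
true_h = np.array([[0.5, 0.0, -160.0],
                   [0.0, 0.0, 100.0],
                   [0.0, 0.002, 0.1]])
gcp_pixels = [(100, 100), (540, 120), (520, 400), (80, 420), (320, 250)]
gcp_ground = [pixel_to_ground(true_h, u, v) for u, v in gcp_pixels]

h = fit_homography(gcp_pixels, gcp_ground)

# Ground distance covered by a one-pixel step, far from and near to the camera
far_step = abs(pixel_to_ground(h, 320, 101)[1] - pixel_to_ground(h, 320, 100)[1])
near_step = abs(pixel_to_ground(h, 320, 401)[1] - pixel_to_ground(h, 320, 400)[1])
```

In this example a one-pixel step near the top of the image covers several times more ground than one near the bottom, which is the wedge effect described above.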

We also make corrections to compensate for distortions caused by imperfections inherent in all camera lenses.
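The text does not say which lens model is used, so the following Python sketch assumes the simplest common one: a single radial term, in which a point at radius r from the image centre is displaced by a factor of (1 + k1 r²). The coefficient `k1`, the normalised coordinates and the function names are assumptions for illustration only.

```python
import numpy as np

def distort(xy, k1):
    """Apply one-term radial distortion to normalised image coordinates."""
    xy = np.asarray(xy, dtype=float)
    r2 = np.sum(xy ** 2, axis=-1, keepdims=True)
    return xy * (1.0 + k1 * r2)

def undistort(xy_distorted, k1, iterations=20):
    """Invert the radial model by fixed-point iteration."""
    xy_distorted = np.asarray(xy_distorted, dtype=float)
    xy = xy_distorted.copy()
    for _ in range(iterations):
        r2 = np.sum(xy ** 2, axis=-1, keepdims=True)
        xy = xy_distorted / (1.0 + k1 * r2)
    return xy

k1 = -0.2  # assumed barrel distortion
true_points = np.array([[0.0, 0.0], [0.3, 0.1], [-0.5, 0.4], [0.4, -0.5]])
observed = distort(true_points, k1)
recovered = undistort(observed, k1)
```

The iteration converges quickly here because the distortion is mild and the coordinates are normalised; a strongly distorting lens might need more terms or a different solver.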